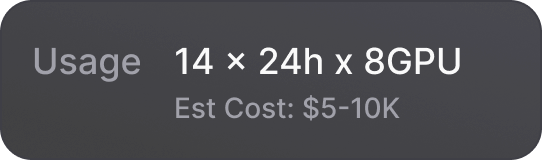

Run large-scale GPU workloads on demand

Submit jobs with simple YAML files while we handle networking, scaling, and issue resolution.

Up & running in minutes, zero code changes


Built for zero downtime, engineered for scale

Only pay when your code is running

ML Infrastructure That Just Works

Frequently Asked Questions

Jobs are submitted to Trainy’s platform with a simple YAML file that works across clouds. You only need to provide your existing torchrun (or equivalent) launch command, and the platform handles the rest. Read our docs for more details.
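
Trainy’s YAML schema is not reproduced here. As a hypothetical illustration of the kind of multi-node launch command such a file wraps, the sketch below assembles a standard torchrun invocation using c10d rendezvous. The helper `build_torchrun_cmd`, the script name `train.py`, and the endpoint address are placeholders. The flags themselves are real torchrun options. Values like the node count and rendezvous endpoint are presumably what a managed platform fills in for you.

```python
import shlex
from typing import List, Sequence

def build_torchrun_cmd(script: str, nnodes: int, nproc_per_node: int,
                       rdzv_endpoint: str,
                       script_args: Sequence[str] = ()) -> List[str]:
    """Build a multi-node torchrun command (hypothetical helper).

    Total processes launched = nnodes * nproc_per_node.
    """
    if nnodes < 1 or nproc_per_node < 1:
        raise ValueError("nnodes and nproc_per_node must be >= 1")
    return [
        "torchrun",
        f"--nnodes={nnodes}",
        f"--nproc_per_node={nproc_per_node}",  # usually one process per GPU
        "--rdzv_backend=c10d",                 # built-in rendezvous backend
        f"--rdzv_endpoint={rdzv_endpoint}",    # host:port reachable by all nodes
        script,
        *script_args,
    ]

# Placeholder values: 2 nodes x 8 GPUs, rendezvous on a made-up address.
cmd = build_torchrun_cmd("train.py", nnodes=2, nproc_per_node=8,
                         rdzv_endpoint="10.0.0.1:29500",
                         script_args=["--epochs", "3"])
print(shlex.join(cmd))
```

The training script itself stays unchanged. torchrun sets environment variables such as `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` for each process it spawns.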

No. For most customers, we help pick the cloud provider offering that best fits their use case. We then assist with hardware validation to ensure they get the promised performance. If you already have a reserved GPU cluster, our solution can be deployed in the cloud or on-prem. For startups, we can help you go from cloud credits to a functional multi-node training setup with high-bandwidth networking in under 20 minutes.
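
Hardware validation ultimately comes down to comparing measured numbers against what the provider advertises. The sketch below is a minimal, hypothetical pass/fail check. It assumes you already have per-node bandwidth results (for example, bus bandwidth from an all-reduce benchmark such as NVIDIA’s nccl-tests). The function name, the 90% tolerance, and the sample figures are all illustrative assumptions, not Trainy’s actual validation procedure.

```python
from typing import Dict, List

def flag_underperforming(measured_gbps: Dict[str, float], expected_gbps: float,
                         tolerance: float = 0.9) -> List[str]:
    """Return nodes whose measured bandwidth is below tolerance * expected.

    Hypothetical helper; the default 0.9 tolerance is an arbitrary choice.
    """
    if not 0 < tolerance <= 1:
        raise ValueError("tolerance must be in (0, 1]")
    threshold = expected_gbps * tolerance
    return sorted(node for node, bw in measured_gbps.items() if bw < threshold)

# Hypothetical per-node results in GB/s, checked against a made-up 200 GB/s spec.
results = {"node-a": 188.0, "node-b": 121.5, "node-c": 190.2}
print(flag_underperforming(results, expected_gbps=200.0))
```

Flagged nodes would then be investigated or returned to the provider before any long training run lands on them.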

Most Trainy customers use a hybrid of on-demand and reserved clusters. For inference servers and dev boxes, it generally makes sense for an AI team to keep a couple of annually reserved GPU instances. For large-scale training workloads, on-demand capacity lets teams burst to larger scale at a lower cost. Because AI work is bursty by nature, teams use on-demand capacity to avoid paying for GPUs that sit idle between large runs.
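
The trade-off is simple arithmetic. Reserved GPUs are billed for every hour of the term, while on-demand GPUs are billed only for the hours they run. On-demand is therefore cheaper whenever expected utilization falls below the ratio of the two hourly rates. The sketch below works this through. All prices, GPU counts, and the utilization figure are hypothetical placeholders, not quotes from any provider.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years

def annual_cost_reserved(gpus: int, hourly_rate: float) -> float:
    """Reserved capacity is billed for every hour of the year, busy or idle."""
    return gpus * hourly_rate * HOURS_PER_YEAR

def annual_cost_on_demand(gpus: int, hourly_rate: float, utilization: float) -> float:
    """On-demand capacity is billed only for the fraction of the year it runs."""
    return gpus * hourly_rate * HOURS_PER_YEAR * utilization

def break_even_utilization(reserved_rate: float, on_demand_rate: float) -> float:
    """Below this utilization, on-demand is cheaper than reserving the same GPUs."""
    return reserved_rate / on_demand_rate

# Hypothetical prices (USD per GPU-hour) and usage, for illustration only.
gpus, reserved_rate, on_demand_rate, utilization = 64, 2.00, 3.00, 0.30
print(f"reserved:  ${annual_cost_reserved(gpus, reserved_rate):,.0f}")
print(f"on-demand: ${annual_cost_on_demand(gpus, on_demand_rate, utilization):,.0f}")
print(f"break-even utilization: {break_even_utilization(reserved_rate, on_demand_rate):.0%}")
```

With these made-up numbers, a 64-GPU burst that runs 30% of the year costs less than half as much on demand as the same GPUs reserved for the full year. Steadily used capacity such as inference servers sits above the break-even point, which is why it tends to be reserved.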

Kubernetes gives AI teams a higher ROI on the same pool of compute. Leading AI research teams, such as those at OpenAI and Meta, run similar systems. With automated scheduling and cleanup of queued workloads, AI engineers no longer have to worry about GPU availability or compatibility. Meanwhile, decision makers get better visibility into and control over their team’s cluster usage, so they can make informed purchasing decisions.

Trainy offers the resource-sharing and scheduling benefits of Slurm, plus more: better workload isolation through containerization, integrated observability, and greater robustness through comprehensive health monitoring.

The first step to reducing GPU spend is cutting idle time. If you have a reserved cluster, this means running a fault-tolerant scheduler. A scheduler lets your team maintain a workload queue that keeps your GPUs busy 24/7, while fault tolerance ensures that GPU failures do not require manual restarts. New and restarted workloads are placed on healthy nodes, even when a failure happens in the middle of the night. Once idle time is minimized, the second step is to examine workload efficiency. The advanced performance metrics in Trainy’s platform make it easy to see how well a workload has been optimized.
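
The queue-plus-fault-tolerance idea can be illustrated with a toy scheduler. This is a deliberately simplified sketch, not how Trainy or Kubernetes actually schedules work. Each job occupies a single node, there is no gang scheduling for multi-node jobs, and resuming from a checkpoint is assumed to be the training job’s responsibility. All class and method names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Optional, Tuple

@dataclass
class Node:
    name: str
    healthy: bool = True
    job: Optional[str] = None  # name of the job currently running, if any

class FaultTolerantQueue:
    """Toy FIFO scheduler: jobs run only on healthy, idle nodes, and a job
    interrupted by a node failure is requeued at the front automatically."""

    def __init__(self, nodes: List[Node]) -> None:
        self.nodes: Dict[str, Node] = {n.name: n for n in nodes}
        self.queue: Deque[str] = deque()

    def submit(self, job: str) -> None:
        self.queue.append(job)

    def schedule(self) -> List[Tuple[str, str]]:
        """Place queued jobs on healthy idle nodes; return (job, node) pairs."""
        placed: List[Tuple[str, str]] = []
        for node in self.nodes.values():
            if not self.queue:
                break
            if node.healthy and node.job is None:
                node.job = self.queue.popleft()
                placed.append((node.job, node.name))
        return placed

    def complete(self, name: str) -> None:
        """Mark the job on a node as finished, freeing the node."""
        self.nodes[name].job = None

    def mark_failed(self, name: str) -> None:
        """Take a failed node out of rotation and requeue its job (no manual restart)."""
        node = self.nodes[name]
        node.healthy = False
        if node.job is not None:
            self.queue.appendleft(node.job)
            node.job = None

sched = FaultTolerantQueue([Node("gpu-0"), Node("gpu-1")])
for job in ["job-a", "job-b", "job-c"]:
    sched.submit(job)
print(sched.schedule())     # job-a and job-b start; job-c waits in the queue
sched.mark_failed("gpu-0")  # job-a is interrupted and requeued ahead of job-c
sched.complete("gpu-1")
print(sched.schedule())     # job-a restarts on the healthy gpu-1
```

Requeuing the interrupted job at the front rather than the back is one possible design choice. It keeps a failure from pushing long-running work behind newer submissions.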

Most Trainy customers stream data into their GPU cluster from an object store such as Cloudflare R2. Distributed file system integrations are on our longer-term roadmap but are not available today.
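
Streaming means reading training shards in fixed-size chunks rather than copying whole datasets to local disk first. The sketch below shows the pattern with a pluggable `open_shard` callable, which is a hypothetical parameter. R2 exposes an S3-compatible API, so with boto3 one option is `s3 = boto3.client("s3", endpoint_url="https://<ACCOUNT_ID>.r2.cloudflarestorage.com")` and `open_shard = lambda key: s3.get_object(Bucket=..., Key=key)["Body"]`. The returned body supports `read(amt)` and `close()`. Bucket names, keys, and the chunk size are placeholders, and credentials setup is omitted.

```python
import io
from typing import BinaryIO, Callable, Iterable, Iterator

def stream_shards(keys: Iterable[str], open_shard: Callable[[str], BinaryIO],
                  chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield byte chunks from each shard in order, one shard open at a time.

    `open_shard` is a hypothetical callable returning any readable binary
    stream, e.g. an S3/R2 object body or a local file.
    """
    for key in keys:
        body = open_shard(key)
        try:
            while True:
                chunk = body.read(chunk_size)
                if not chunk:  # empty bytes means end of this shard
                    break
                yield chunk
        finally:
            body.close()

# Local stand-in for object storage: in-memory "shards" keyed by name.
fake_store = {"shard-000": b"hello", "shard-001": b"world!"}
for chunk in stream_shards(["shard-000", "shard-001"],
                           lambda key: io.BytesIO(fake_store[key]),
                           chunk_size=4):
    print(chunk)
```

In a real pipeline, the chunks would be fed into a decoder for the shard format (for example, tar or record files) inside the data loader, so GPUs consume data while later shards are still being fetched.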

We can give your team access to multiple K8s clusters corresponding to different clouds, but jobs are submitted to one cluster at a time.

The earlier, the better. When your company is exploring generative AI applications, on-demand clusters are a cost-effective way to run large-scale experiments. When the time comes to choose a cloud provider, we help you navigate provider offerings and make sure you get maximum performance.